You will hardly find anyone in the 3D community who doesn't know of Maxwell Render or has never tried it. 10 years ago (or 12 years ago, if you count its public alpha release in 2004), committing to raytracing, unbiased rendering and physically correct light computation and materials could be life (and career) changing for an ArchViz artist, as it also was for the entire Maxwell Render development team. Nowadays we have a much wider choice of rendering software, plugins and, of course, offline and real-time render engines. We have more powerful computers and, overall, more advanced technologies that allow us to achieve a level of photorealism we couldn't even dream of years ago, yet we still cannot talk about photorealism, in either results or approach, without mentioning Maxwell Render.

3D Architettura invited Juan Cañada to speak about the past and the future of Maxwell Render and to take us behind the scenes of this famous render engine.

Exclusive interview

with Juan Cañada,

Head of Maxwell Render,

Next Limit Technologies

Who do you see in the mirror: a programmer who succeeded in computer graphics or an artist who became a software developer?

A mechanical engineer who thought he was going to work in fluid simulation and, for reasons he cannot really remember (it was many years ago, and I have a bad memory), ended up coding a raytracer.

When you started working on Maxwell Render more than 10 years ago, how did you come up with the idea of developing an unbiased render engine?

Maxwell started more as an experiment than as a product developed with commercial intentions. We just asked ourselves, "What if we try to create the most realistic render engine, capable of producing the most beautiful synthetic images ever seen? We will not care about render times, nor about implementing many features yet; we will just work on the correctness of the core, and the rest will come later." That led to a physically-based rendering approach using unbiased methods, concepts that are very popular now but were completely alien to people at that time.

What was the most difficult part of developing the first alpha and beta versions?

The biggest internal difficulty was handling the success. When we released the first beta, we were not ready to handle thousands of users at any level: sales support, tech support, development cycles, testing, release management… Changing our whole structure while keeping the project in good shape was a big challenge. Externally there were other difficulties, the main one being that many people were not yet ready for the shift to physically-based rendering that would come years later.

What might have been different for you and for Maxwell Render if crowdfunding had been as popular 10 years ago as it is today?

I usually do not torture myself with "what if" scenarios; I prefer to focus on what is coming next, so I am not sure. I would have loved to put more resources into convincing people that unbiased physically-based rendering was the future, something only a few visionaries understood at the time.

When Maxwell Render first appeared, the average computer was not very powerful and achieving a decent rendering result required a lot of time. How did you persuade your investors and your colleagues that unbiased rendering was still the right approach and the road to the future, as it indeed turned out to be?

It took time, as I mentioned in my previous answer. Some ideas are so disruptive that no matter how much effort you put into convincing people to adopt them, they still need time. Fortunately, we did not have investors, so it depended only on us.

Many new render engines developed recently are not entirely based on their own technology but instead build on Intel Embree, CryEngine or other frameworks. What are your thoughts, as a developer, about this situation?

I am more interested in render technologies that take their own original approach than in products built on top of an existing framework. If you build on Embree or similar tools, whether CPU- or GPU-based, you leave yourself very little room to differentiate.

If you were creating your render engine from scratch today, with complete freedom, what approach would you take? What would you do?

I would do what we are doing now, but I am afraid I cannot share anything yet. Hopefully in a few months! If all goes as planned, 2016 is going to be a very exciting year.

Next Limit has developed technologies for cinema SFX (RealFlow) and for ArchViz (Maxwell Render), but what about video games and real-time rendering? Are there any plans in these directions as well?

All 3D artists know Maxwell Render. Some of them state in their portfolios that they use other render engines, while admitting in private conversations that they actually work in Maxwell Render but prefer to keep it as a secret weapon hidden from their competitors. Were you aware of this? Do you think it hurts your promotional possibilities or, on the contrary, strengthens the professional image of Maxwell Render?

I have heard about that "secret weapon" thing many times; I even have friends who do it. It is difficult to say whether this is good or bad for us. On the one hand, it is cool that Maxwell has this reputation of being a hidden luxury, but at the same time we have to feed our families, so selling licenses is mandatory. Keep that in mind, beloved reader, if you belong to this "secret weapon" group :)

Judging by the forums and the questions you receive, what is the most common mistake people make when using Maxwell Render? What is the first thing you would teach 3D and visualization students about your software?

I would say that people should not focus on specific algorithms or tools, because they change quickly. For example, if you are an expert in photon mapping and know how to get rid of all those artifacts, but then the implementation changes in the next version of your favorite renderer… you will have to relearn too many things. I often see beginners with a strong "feature fever" who are too focused on finding out where tool X is located or what tool Y does; they install hundreds of 3ds Max modifiers or Photoshop filters and get overwhelmed. I think it is more productive and enjoyable to stick to the basics: if you want to learn Maxwell, learn photography. If you want to understand more about its internals, study some optics.

Once a project or tool becomes available to a wide audience, people sometimes start using it in ways its creator did not expect. Have you encountered anything like that? Have Maxwell Render users surprised you by discovering new, unforeseen possibilities in it?

Indeed, the most surprising and satisfying unexpected uses of Maxwell have been related to predictive rendering, where our renderer is used to make decisions at the design stage and to understand how things will look once they are manufactured. Nowadays this is probably the area where Maxwell shines the most, but back then it was amazing to find out that people were using it as a decision-making tool. I have even seen two designers design their wedding rings using Maxwell; that is a big responsibility!

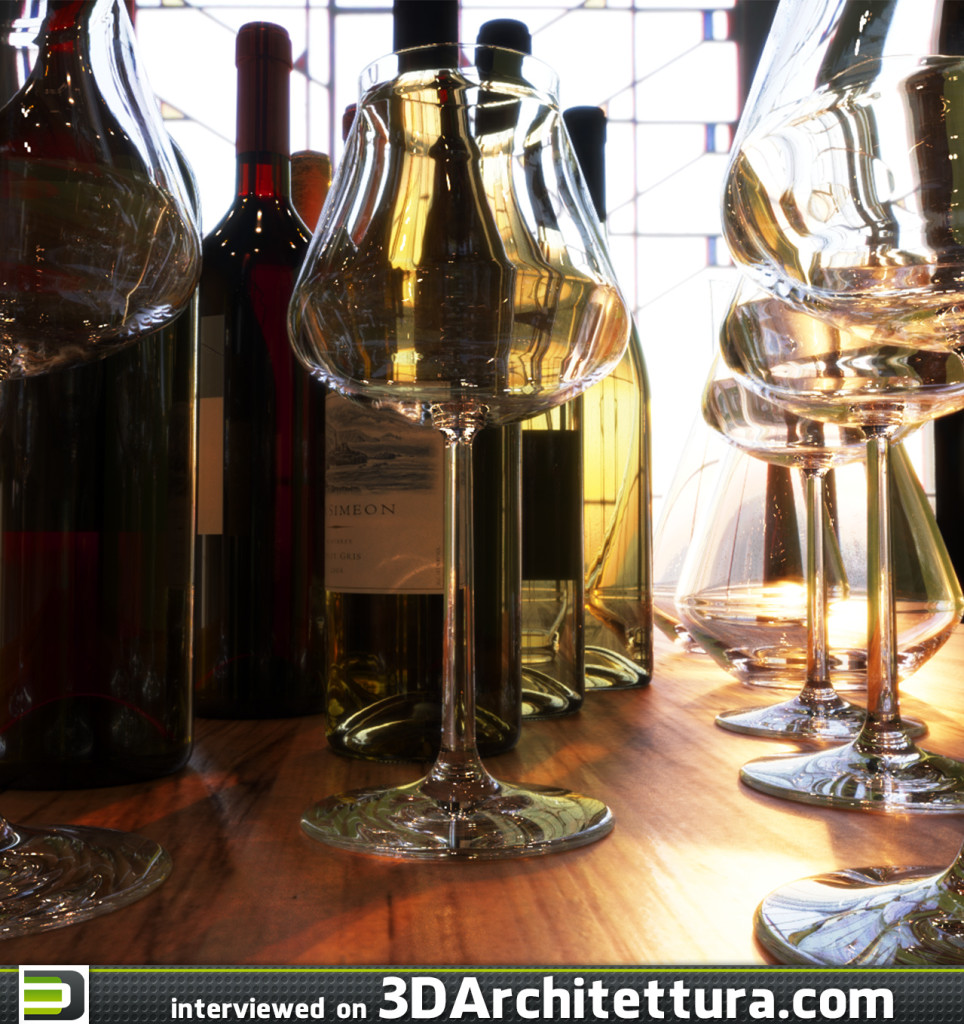

Images courtesy of Maxwell Render